OpenStack has been providing block storage replication facilities for a long time through the implementation of Cinder Replication.

There are a number of vendors who have replication capabilities as part of their Cinder drivers, but these have been limited to asynchronous and/or synchronous replication between two different backends.

Yes, you can have multiple replication targets for a primary backend array, which can give the illusion of multi-site replication, but in the current incarnations a Cinder volume can only be replicated to one target array. The volume is therefore either synchronously or asynchronously replicated to a single target array, because the Cinder “volume type” that controls the volume’s replication can only specify one “replication type”.

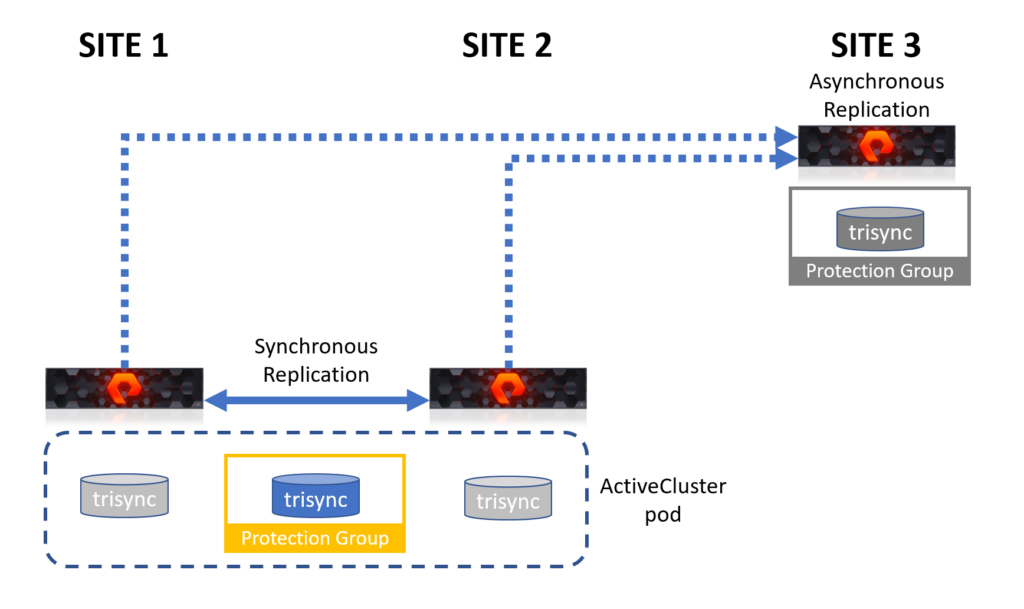

With the release of OpenStack 2023.1 (aka Antelope), Pure Storage have raised the bar by adding fully featured 3-site replication, using a new “replication type” called “trisync” that allows a single volume to be created and replicated to both a synchronous target and a different asynchronous target concurrently, providing a true 3-site disaster recovery configuration.

This new “replication type” relies on Pure Storage FlashArray’s native active-active-async replication capabilities and just exposes this to OpenStack administrators.

So what is going on in this scenario?

The pre-2023.1 Pure FlashArray Cinder driver had, like most vendor drivers, the ability to replicate volumes synchronously and asynchronously by using the FlashArray constructs of a pod for synchronous replication and a protection group for asynchronous replication. The driver created the required pod(s) in ActiveCluster mode, or protection group(s), depending on the type of replication the driver was configured to perform.

The new “trisync” replication type uses an ActiveCluster pod to replicate the volume synchronously to a second array (within latency limits) and then creates a protection group inside the pod that replicates to a third array, which could be located anywhere in the world (or even in the cloud, if the target is a Pure CloudBlockStore). The “trisync” volume is created inside the pod’s protection group, meaning that the volume exists on all three arrays.
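To make the placement rules concrete, here is a minimal Python sketch of which arrays end up holding a copy of a volume for each replication type described above. It is only an illustration of the description in this post, not driver code: the `Topology` class, its field names, and the array names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Topology:
    """Hypothetical model of a 3-site layout; not part of the Cinder driver."""
    primary: str       # array named in the backend stanza
    sync_target: str   # second array, member of the ActiveCluster pod
    async_target: str  # third array, target of the protection group

    def copies(self, replication_type: str) -> list:
        """Return the arrays expected to hold a copy of the volume."""
        if replication_type == "sync":
            # Pod stretched across the primary and the sync target.
            return [self.primary, self.sync_target]
        if replication_type == "async":
            # Protection group replicating to the async target.
            return [self.primary, self.async_target]
        if replication_type == "trisync":
            # Protection group inside the stretched pod: all three arrays.
            return [self.primary, self.sync_target, self.async_target]
        raise ValueError(f"unknown replication type: {replication_type}")


sites = Topology("array-1", "array-2", "array-3")
print(sites.copies("trisync"))
```

The point of the sketch is simply that “trisync” is the union of the two older behaviours rather than a third, separate mechanism.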

The new functionality allows for a volume to be “retyped” from “trisync” to “sync” and back again at will.

Full support for Cinder consistency groups based on “trisync” volume types is also provided, including the ability to clone a “trisync” consistency group.

This “trisync” replication capability is being backported downstream to IBM’s PowerVC v2.1.1, which is based on OpenStack Yoga and scheduled for release in 2023 Q2. IBM will refer to this feature as HyperSwap.

Due to OpenStack upstream rules, we can’t backport this functionality from 2023.1 to earlier releases, but if you are interested in a downstream backport, then please reach out to your Pure Storage account team.

How is this 3-site replication actually configured?

The first thing to state is that there are some pretty specific rules around enabling this type of replication.

Within the Cinder configuration file, as part of the main backend stanza, there need to be two, and only two, replication_device parameters defined. One of these must be of type:sync and the other must be of type:async. Additionally, there is a new parameter that must be added to the stanza: pure_trisync_enabled = True.

So a full backend stanza for a Pure Storage FlashArray with 3-site replication enabled would be:

    [puredriver-1]
    volume_backend_name = puredriver-1
    volume_driver = cinder.volume.drivers.pure.PureISCSIDriver
    san_ip = <array 1 IP>
    pure_api_token = <array 1 API>
    pure_eradicate_on_delete = True
    replication_device = backend_id:site-2-array,san_ip:<array 2 IP>,api_token:<array 2 API>,type:sync
    replication_device = backend_id:site-3-array,san_ip:<array 3 IP>,api_token:<array 3 API>,type:async
    use_multipath_for_image_xfer = True
    pure_trisync_enabled = True
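Because the rules are strict, it can be worth checking a stanza before restarting the Cinder volume service. The following is a minimal sketch of such a check in Python. The function names are hypothetical, the sample addresses and tokens are made up, and the sketch assumes each replication_device states its type explicitly. It parses the stanza by hand because Python's `configparser` does not keep repeated keys such as replication_device.

```python
def parse_stanza(text: str) -> dict:
    """Collect options from one backend stanza, keeping repeated keys."""
    options = {}
    for raw in text.splitlines():
        line = raw.strip()
        # Skip blanks, comments, and the [section] header.
        if not line or line.startswith(("#", ";", "[")):
            continue
        key, _, value = line.partition("=")
        options.setdefault(key.strip(), []).append(value.strip())
    return options


def device_type(device: str) -> str:
    """Extract the 'type' field from a replication_device value."""
    fields = dict(item.split(":", 1) for item in device.split(",") if ":" in item)
    return fields.get("type", "")


def trisync_problems(options: dict) -> list:
    """Return a list of rule violations; an empty list means the stanza passes."""
    problems = []
    devices = options.get("replication_device", [])
    if len(devices) != 2:
        problems.append(f"expected exactly 2 replication_device entries, found {len(devices)}")
    if sorted(device_type(d) for d in devices) != ["async", "sync"]:
        problems.append("replication_device types must be one sync and one async")
    enabled = options.get("pure_trisync_enabled", ["False"])[-1]
    if enabled.lower() != "true":
        problems.append("pure_trisync_enabled is not set to True")
    return problems


# Sample stanza with made-up addresses and tokens, for illustration only.
SAMPLE = """
[puredriver-1]
san_ip = 10.0.0.1
replication_device = backend_id:site-2-array,san_ip:10.0.0.2,api_token:aaa,type:sync
replication_device = backend_id:site-3-array,san_ip:10.0.0.3,api_token:bbb,type:async
pure_trisync_enabled = True
"""

print(trisync_problems(parse_stanza(SAMPLE)) or "stanza looks valid for trisync")
```

The driver performs its own validation at startup; this kind of pre-check only helps catch an obvious mistake earlier.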

Now that the backend has been configured with 3-site replication, all that needs to be done is create a Cinder volume type that can be used to create 3-site replicated volumes. This is done using the following commands:

    openstack volume type create trisync
    openstack volume type set --property replication_enabled='<is> True' trisync
    openstack volume type set --property replication_type='<in> trisync' trisync
    openstack volume type set --property volume_backend_name=puredriver-1 trisync

Notice that here we are introducing the “replication_type” of “trisync”. This, combined with the backend stanza configuration detailed above, will ensure that all volumes created with this volume type will be 3-site replicated.
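If you manage several backends, the same four commands can be generated rather than typed. This is a small Python sketch, assuming the `openstack` CLI is installed and authenticated wherever the commands are eventually run. The helper name `trisync_type_commands` is hypothetical, and the sketch only builds and prints the commands; it does not execute them.

```python
import shlex


def trisync_type_commands(type_name: str, backend_name: str) -> list:
    """Build the CLI commands for a trisync volume type as argument lists."""
    properties = [
        "replication_enabled=<is> True",
        "replication_type=<in> trisync",
        f"volume_backend_name={backend_name}",
    ]
    commands = [["openstack", "volume", "type", "create", type_name]]
    for prop in properties:
        commands.append(
            ["openstack", "volume", "type", "set", "--property", prop, type_name]
        )
    return commands


# Print shell-quoted commands for review before running them.
for cmd in trisync_type_commands("trisync", "puredriver-1"):
    print(shlex.join(cmd))
```

Each argument list can be passed to `subprocess.run(cmd, check=True)` once you are happy with the printed output. Keeping the commands as lists avoids shell-quoting mistakes around the `<is>` and `<in>` operators.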

As more replication features become available in the FlashArray, we will look at adding them as supported features in the FlashArray Cinder driver as well.

Keep checking back to this blog site for more details as they become available.