Within the OpenStack framework, storage comes in two main flavours – ephemeral and persistent. Here I’ll be looking at persistent storage and comparing Ceph, software-defined storage (SDS) and the Pure Storage® FlashArray™, an external storage platform.

Why Persistent Storage?

The main OpenStack projects that require storage are Nova, Cinder, Manila, Glance and Swift, each having its specific requirements and features. There could be a blog for each of these projects and their storage use cases, but here I’m largely going to consider Cinder and touch on how this block storage project works in conjunction with Nova and Glance. Manila and Swift are a little more specialised and using block storage for these can be achieved, sometimes with some type of middleware or abstraction.

When talking about Nova’s persistent storage needs these largely fall into two main categories: boot volumes and data volumes. Ceph and Pure Storage can provide ephemeral storage to Nova through simple mount points, but persistent storage is provided through the Cinder project and the vendor drivers for Ceph and Pure Storage.

Glance can use several different storage backends, including file, object and block, to store its catalogue of images required by Nova. In the context of block storage both Ceph and Pure Storage can provide Glance store backing through mount points, but when using Pure Storage the FlashArrays data-reduction technology can provide significant storage capacity savings over the storage requirements for the same group of Glance images than Ceph can.

So Ceph and Pure can be said to offer the same block storage capabilities in OpenStack. Let’s look a little more deeply at why you would choose one over the other.

Ceph: What is it and why does it have such a huge following in the OpenStack world?

The TL;DR; is that Ceph is a scale-out storage system based on an object store. It can also expose block storage and a POSIX-compliant file system, making it a great starting point for any storage requirements in OpenStack, allowing a system to be built with multiple storage capabilities.

Add to that the fact that you can install Ceph for free, it is a bit of a no-brainer when building out your new OpenStack cloud. Anyone wanting to build a small OpenStack cloud on a budget and only have community servers will choose Ceph for their storage layer. Sadly not everyone has a spare Pure Storage FlashArray to play with.

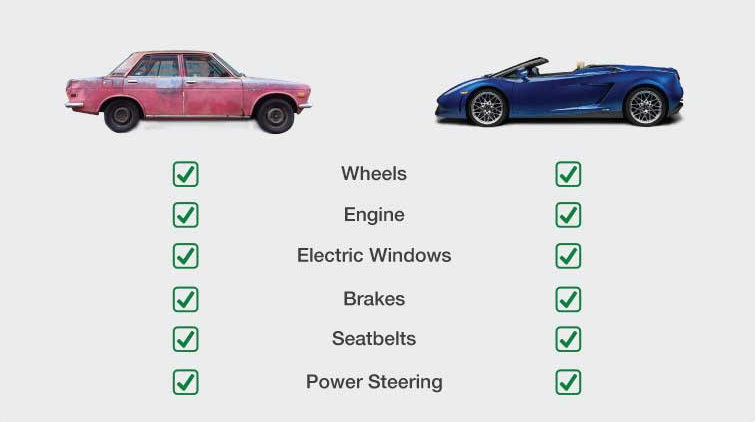

Let’s look at Ceph and compare its OpenStack Cinder features to those of the Flasharry, starting with the OpenStack Cinder Support Matrix (Zed at the time of writing). At first glance, it appears that both Ceph and Pure Storage both support the same features, except for Consistency Groups not being supported by Ceph – not necessarily a major problem. So features are not an issue but checklists have an inherent problem that I will demonstrate here:

So why Ceph or why Pure Storage for your persistent storage in OpenStack?

Architecture

TL;DR; Pure has a much smaller footprint, power and cooling requirements than a Ceph cluster of similar effective capacity and can match or exceed the performance of Ceph.

Simplistically Ceph requires a large number of servers, loaded with storage devices, (HHDs, SSD or even NVMe drives). that link together over a network, usually dedicated, to form a cluster. To scale out Ceph you would, theoretically just add more servers and more disks, but this will then have a knock-on effect on your networking infrastructure requirements. Also note that Ceph can only present block storage to OpenStack cluster tenants over the iSCSI protocol, which ideally should have a dedicated flat network. So each server needs NICs for management, NICs for Ceph cluster communication and NICs for the iSCSI data plane (if the server in question has been configured as an iSCSI gateway). Take note that when you want to scale your Ceph cluster either up or down, beware that you don’t uoset the cluster quorum as that can cause your entire storage cluster to hang. If a node is going bad, please add a replacement before trying to remove the failing one… that will probably remove the quorum-loss issue, but adding nodes is still fraught with risk, especially if software versions don’t exactly match or disk partitions are not exactly the same.

Ceph can be configured for high-performance requirements or capacity requirements, but you can’t decide to change your design once a Ceph cluster has been initiated.

The Pure Storage FlashArray is a single appliance that can scale up its storage capabilities within its defined rack footprint (options are available to provide 73TB effective in 3RU, to 5.5PB effective in 11RU), and can present block storage over iSCSI, Fibre Channel and NVMe protocols. Command and control of the FlashArray are over a shared management network. If more storage is required than the FlashArray is configured to support, additional storage can be added seamlessly with zero disruption, or additional FlashArrays can be configured into OpenStack with very little, or no, impact. You can even upgrade your array model non-disruptively.

Pure Storage has multiple versions of the FlashArray that are optimized for performance, capacity or hybrid workloads, all of which can be easily integrated into Cinder with the required workload targetted to the correct FlashArray backend.

Replication

As we saw in the OpenStack Cinder Support Matrix, both Ceph and Pure Storage, support similar feature sets, but these are not implemented in the same fashion. Let’s look at replication.

Ceph replication is based on splitting the storage cluster into two portions, but these are all in the same cluster, so this cannot cater for a full data center disaster. By splitting the cluster you have also halved the storage capacity of the entire Ceph cluster, which is already reduced by the number of replicas for each storage block in the cluster.

Pure Storage can support asynchronous and synchronous replication across multiple arrays, and there is even tri-sync replication support coming in OpenStack 2023.1 (Antelope). Pure’s replication is to completely separate arrays that can be in the same, metro-distance, or remote data centers, providing complete disaster recovery capability.

Management

From a management perspective, Ceph can be a pretty complex beast, and yes, it can be made to run amazingly fast and highly resilient by organizations such as CERN, but they do have some of the best brains in the world working for them. Not every organization can afford a PhD as their storage admin. Keeping Ceph fed and watered is a full-time job and requires a lot of knowledge in a small number of individuals. Don’t let your Ceph admin ever have a reason to look for another job or you could be in big trouble.

Pure management for OpenStack is as simple as, configuring the array into Cinder and never having to log into the array again. All management and control functions are performed by the Pure Storage Cinder driver and the underlying Purity operating system manages all the flash NAND in the array to provide optimal performance and even manage noisy neighbours without any human intervention. If you want to log onto your Flasharray it provides a simple and intuitive GUI interface with all the data you could need in a few clicks of a mouse and if you want to have a fleet-management view of your FlashArray backends then Pure Storage provides a free, AI-driven, SAAS offering called Pure1 than can even be accessed through a cellphone app. There is also the option of getting performance and capacity telemetry in OpenMetrics format for ingestion into your favourite observability toolset.

Failure Scenarios

You have got through all the hardware and software and Ceph is running nicely for you. You can create boot and data volumes through Cinder and all is good with the world. But now you have to keep it that way. Ceph has a separate management layer, outside of OpenStack, that you need to become competent in, including how to use and configure Prometheus and Grafana to get those all-important performance characteristics that your manager is going to keep asking for. If you are lucky there will be someone in your team who can help bear the load of feeding and watering Ceph, but in many instances, this isn’t the case and you become critical to the Ceph infrastructure and ensuring it keeps performing at optimum levels and hope there are no network outages, disk failures, or even CPU or memory failures. These will cause Ceph to go into rebuild mode and depending on how you configured your data layer this could take days… Worst case, you may even loose quorum and your whole storage cluster could hang!! Enjoy those long weekends and vacations where you are permanently being bugged by your team and manager about issues with performance and capacity!!

Even with Pure Storage your management or support organization can call whilst you are enjoying a family meal or a drink with friends, but with Pure all you have to do is reach for your cell phone and open the Pure1 app to see what is happening to the FlashArray, probably to only discover there is nothing wrong and that the issue you were called about is not related to the storage at all…

If a storage module in a Pure Storage FlashArray fails the array will self-heal faster than you can imagine. Sometimes it will be healed before you can even switch the GUI to the page where the array parity level is exposed – it will already be back at 100%. You can fail at least two storage modules at any one time, but after the array is back to parity you can keep failing modules until you get to the point where the data on the array equals the remaining useable capacity of the array. Note there will be no perceptible degradation in performance, even when the array is running at 100% capacity. Even the failure of a controller will not affect the performance of the array.

Yes, if there are network or compute issues external to the FlashArray your applications may experience performance degradation, loss of paths to devices, etc., but once these are fixed the application performance will immediately come back. Ceph will still be in rebuild mode even after issues are resolved especially if the failure was in any part of the complex infrastructure supporting the Ceph cluster.

If you fail a storage module in a Pure Storage FlashArray the array will self-heal faster than you can imagine. Sometimes it will be healed before you can even switch the GUI to the page where the array parity level is exposed – it will already be back at 100%. You can fail at least two storage modules at any one time, but after the array is back to parity you can keep failing modules until you get to the point where the data on the array equals the remaining useable capacity of the array. Oh and there will be no perceptible degradation in performance, even when the array is running at 100% capacity.

Making the Leap

Several Pure Storage customers have decided to move away from Ceph-backed block storage in their OpenStack environments, due to several reasons, but manageability, stability and scale-out performance are the main reasons.

The question always arises… How do I move my Ceph volumes to Pure Storage in OpenStack?

The answer, as usual, is: It depends…

Not a helpful answer, so I’ll try and expand on this a little. However, the first thing to say is that the main “it depends” is regarding whether the volume is to be migrated in a data volume or a boot volume.

Data Volumes

Let’s start with data volumes as these are easy to migrate.

All that is required is to create a new Cinder Volume Type that maps to your new, shiny Pure Storage FlashArray, then you run the openstack volume retype command.

Under the covers what is happening is that Nova requests a new volume from the target backend, mounts that to the Nova instance and then performs a dd to move the data to the new volume. On completion of the dd, the original volume is unmounted and the UUID of the new volume is switched to match the old volume. Whilst this is going on all IO to the volume mount point is suspended by Nova, so applications may experience a delay or pause.

Boot Volumes

Boot volumes are a little more complex to migrate, purely because the Nova instance is continuously using this volume, therefore you have to choose from three migration paths and which you choose will depend on your risk appetite, method the original instance and boot volume were created and the OpenStack services you have available to you.

So let’s look at the three options:

- Delete the instance, migrate the volume to the new backend (using

openstack volume retype) and recreate the instance, this time using the migrated volume on the new backend. Note that this does require that the original boot volume had been createddelete_on_termination=falseso that it doesn’t get automatically deleted when the instance is deleted. - Create a snapshot of the current boot volume, then create a new glance image from this snapshot. Finally, create a new volume on the new backend using this snapshot-created image.

- Use a backup of the original boot volume and create a new volume from this backup on the new backend, but this requires that you have the

cinder-backupservice configured and running in your OpenStack cluster.

Summary

Ceph has amazing adoption in the OpenStack world, but it does have its issues, especially as your OpenStack cluster scales from development to production levels. Then you may start to experience issues and consider moving to an external storage array.

If power, rack space, cooling, performance and ease of management are important to you, then consider the Pure Storage FlashArray as an alternate or even parallel solution to Ceph.

Migrating your existing Ceph volumes to another backend is not as hard as you might imagine – it will just take a bit of planning.

“Also note that Ceph can only present block storage to OpenStack cluster tenants over the iSCSI protocol, which ideally should have a dedicated flat network. So each server needs NICs for management, NICs for Ceph cluster communication and NICs for the iSCSI data plane (if the server in question has been configured as an iSCSI gateway). ”

Ceph presents block storage via RBD not iSCSI. iSCSI is an optional transport protocol the Ceph cluster can be configured to use.

It is also reasonable to assume that given how common place 25,50,100GbE networking is now (this is the high end of 7+ years ago) using virtual functions that hang off a fast NIC is expected. The physical footprint ends up being 2 NICs running in LACP aggregation to a switch fabric and the virtual functions have the appropriate VLAN tag assigned created a dedicated virtual “NIC” to the data/management/access layers. Almost all enterprise network cards support some sort of resource partitioning/cos/qos that can be assigned to the vlans/vfs allowing for predictable and consistent network performance.

“Ceph replication is based on splitting the storage cluster into two portions, but these are all in the same cluster, so this cannot cater for a full data center disaster. By splitting the cluster you have also halved the storage capacity of the entire Ceph cluster, which is already reduced by the number of replicas for each storage block in the cluster.”

This is a Ceph cluster configured in a stretch or metro-DR mode. You are ignoring the mirroring capabilities that allow for asynchronous replication between different ceph clusters via rbd-mirror, cephfs-mirror and cephrwg multisite.

https://docs.ceph.com/en/latest/rbd/rbd-mirroring/

https://docs.ceph.com/en/latest/cephfs/cephfs-mirroring/

https://docs.ceph.com/en/latest/radosgw/multisite/